
Pocket Flow - LLM Framework in 100 Lines

A 100-line minimalist LLM framework for (Multi-)Agents, Prompt Chaining, RAG, etc.

- Install via `pip install pocketflow`, or just copy the source code (only 100 lines).
- If the 100 lines feel terse and you'd prefer a friendlier intro, check this out.

💡 Pro tip: Build LLM apps with LLM assistants (ChatGPT, Claude, Cursor.ai, etc.)

Use Claude to build LLM apps

- Set project custom instructions. For example:

  1. Check "tool.md" and "llm.md" for the required functions.
  2. Design the high-level (batch) flow and nodes in an artifact using Mermaid.
  3. Design the shared memory structure: define its fields, data structures, and how they will be updated. Think out loud about the above first, and ask the user whether your design makes sense.
  4. Finally, implement. Start with simple, minimalistic code without, for example, typing. Write the code in an artifact.

- Ask it to build LLM apps (Sonnet 3.5 strongly recommended)!

  Help me build a chatbot based on a directory of PDFs.

Use ChatGPT to build LLM apps

- Try the GPT assistant. However, it uses older models, which are good at explaining but not as good at coding.
- For stronger coding capabilities, consider sending the docs to more advanced models like O1:
  - Paste the docs link (https://github.com/the-pocket/PocketFlow/tree/main/docs) into Gitingest.
  - Then paste the generated contents into your O1 prompt and ask it to build LLM apps.

Documentation: https://the-pocket.github.io/PocketFlow/

Why Pocket Flow?

Pocket Flow is designed to be the framework used by LLMs. In the future, LLM projects will be self-programmed by LLMs themselves: users specify requirements, and LLMs design, build, and maintain the project. Current LLMs are:

- 👍 Good at Low-level Details: LLMs can handle details like wrappers, tools, and prompts, which don't belong in a framework. Current frameworks are over-engineered, making them hard for humans (and LLMs) to maintain.
- 👎 Bad at High-level Paradigms: While paradigms like MapReduce, Task Decomposition, and Agents are powerful, LLMs still struggle to design them elegantly. These high-level concepts should be emphasized in frameworks.

The ideal framework for LLMs should (1) strip away low-level implementation details, and (2) keep high-level programming paradigms. Hence, we provide this minimal (100-line) framework that allows LLMs to focus on what matters.

Pocket Flow is also a learning resource, as current frameworks abstract too much away.

| Framework | Computation Models | Communication Models | App-Specific Models | Vendor-Specific Models | Lines of Code | Package + Dependency Size |
|---|---|---|---|---|---|---|
| LangChain | Agent, Chain | Message | Many | Many | 405K | +166MB |
| CrewAI | Agent, Chain | Message, Shared | Many | Many | 18K | +173MB |
| SmolAgent | Agent | Message | Some | Some | 8K | +198MB |
| LangGraph | Agent, Graph | Message, Shared | Some | Some | 37K | +51MB |
| AutoGen | Agent | Message | Some | Many | 7K | +26MB |
| PocketFlow | Graph | Shared | None | None | 100 | +56KB |

How Does It Work?

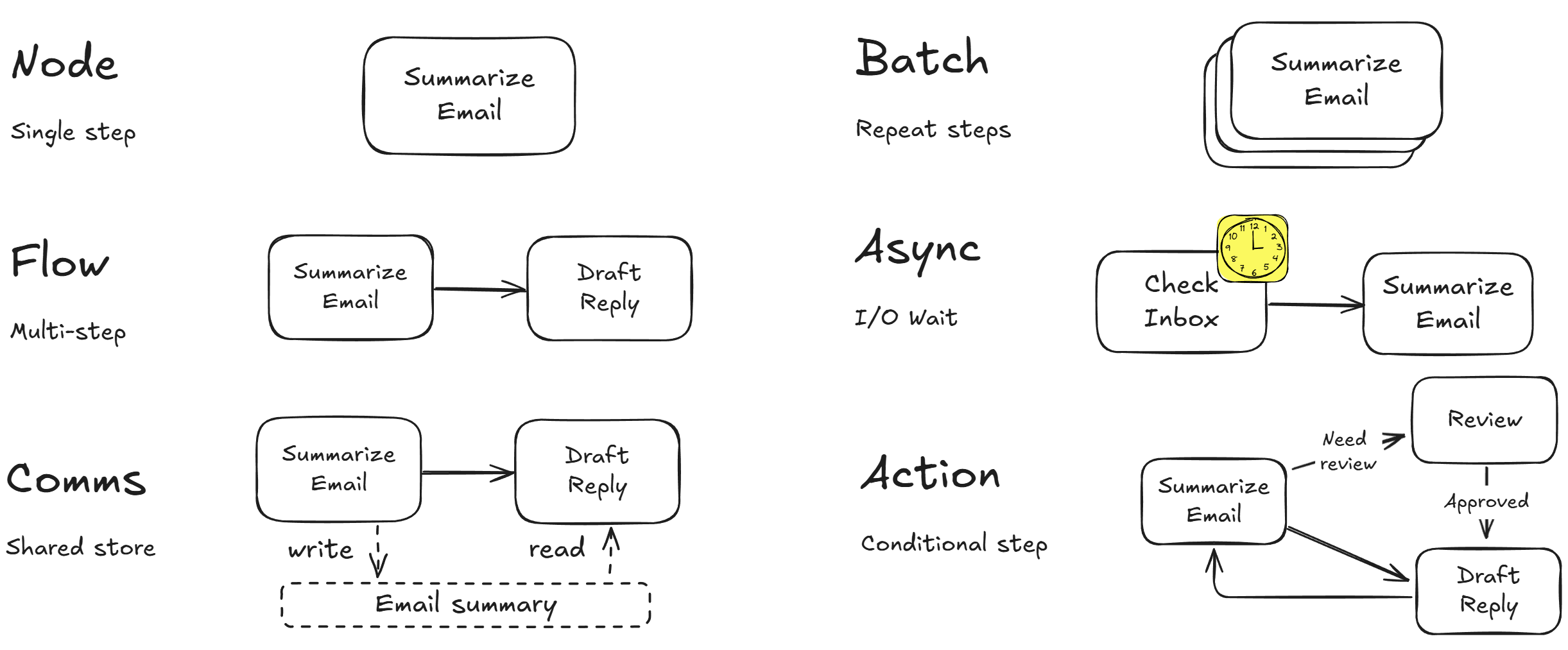

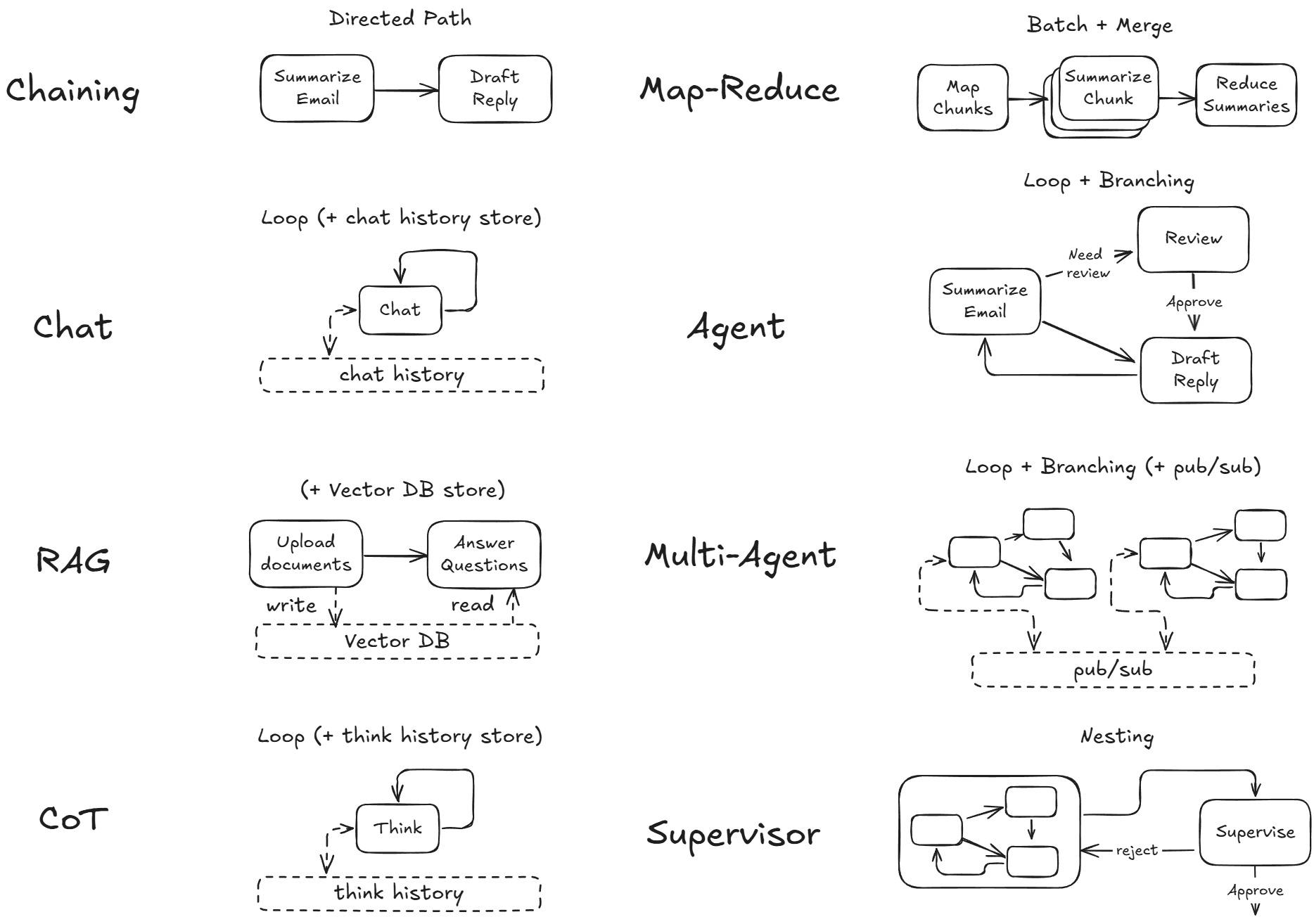

The 100 lines capture what we see as the core abstraction of most LLM frameworks: a Nested Directed Graph that breaks tasks down into multiple (LLM) steps, with branching and recursion for agent-like decision-making.

From there, it’s easy to layer on more complex features like (Multi-)Agents, Prompt Chaining, RAG, etc.
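The nested directed graph described above, with an agent-like loop layered on top, can be sketched in plain Python. This is a hypothetical, self-contained illustration of the idea, not Pocket Flow's actual source or API: the classes `Node` and `Flow`, the methods `next` and `run`, the `max_steps` guard, and the `fake_llm` stub (standing in for a real model call) are all assumptions made for this sketch. Each node reads and writes a shared `dict` and returns an action string that selects the next edge. A `Flow` is itself a node, so flows nest, and an edge pointing back to an earlier node gives the agent loop.

```python
from typing import Dict, Optional


class Node:
    """One step in the graph (hypothetical sketch, not the library's API)."""

    def __init__(self) -> None:
        self.successors: Dict[str, "Node"] = {}

    def next(self, node: "Node", action: str = "default") -> "Node":
        # Register a labeled edge; returning `node` allows chaining.
        self.successors[action] = node
        return node

    def run(self, shared: dict) -> str:
        # Read/write the shared store, then return an action naming the next edge.
        return "default"


class Flow(Node):
    """A graph of nodes that is itself a Node, so flows can be nested."""

    def __init__(self, start: Node, max_steps: int = 100) -> None:
        super().__init__()
        self.start = start
        self.max_steps = max_steps  # guard against infinite agent loops

    def run(self, shared: dict) -> str:
        node: Optional[Node] = self.start
        action = "default"
        for _ in range(self.max_steps):
            if node is None:
                break
            action = node.run(shared)
            node = node.successors.get(action)  # branch on the returned action
        else:
            if node is not None:
                raise RuntimeError("step limit exceeded")
        # The last action bubbles up, so a parent flow can branch on it.
        return action


def fake_llm(prompt: str) -> str:
    # Hypothetical stub: asks to search until some context exists.
    return "search" if "CONTEXT: none" in prompt else "answer"


class Decide(Node):
    def run(self, shared: dict) -> str:
        context = shared.get("context") or "none"
        return fake_llm(f"QUESTION: {shared['question']}\nCONTEXT: {context}")


class Search(Node):
    def run(self, shared: dict) -> str:
        shared["context"] = f"notes about {shared['question']}"
        return "decide"


class Answer(Node):
    def run(self, shared: dict) -> str:
        shared["answer"] = f"Answer using {shared['context']}"
        return "done"


class Report(Node):
    def run(self, shared: dict) -> str:
        shared["report"] = shared["answer"].upper()
        return "default"


# Agent: Decide branches to Search or Answer; Search loops back to Decide.
decide, search, answer = Decide(), Search(), Answer()
decide.next(search, "search")
decide.next(answer, "answer")
search.next(decide, "decide")
agent = Flow(start=decide)

# Nesting: the inner flow's final action ("done") selects the outer edge.
agent.next(Report(), "done")
pipeline = Flow(start=agent)

shared = {"question": "What is Pocket Flow?"}
result = pipeline.run(shared)
print(shared["answer"])  # prints "Answer using notes about What is Pocket Flow?"
```

Keeping all state in one shared store, rather than passing messages between nodes, means each node only needs to know which keys it reads and writes. Routing stays entirely in the edges and action strings.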

- To learn more, check out the documentation: https://the-pocket.github.io/PocketFlow/
- Beginner Tutorial: Text summarization for Paul Graham essays + QA agent
- More coming soon... Let us know what you'd love to see!